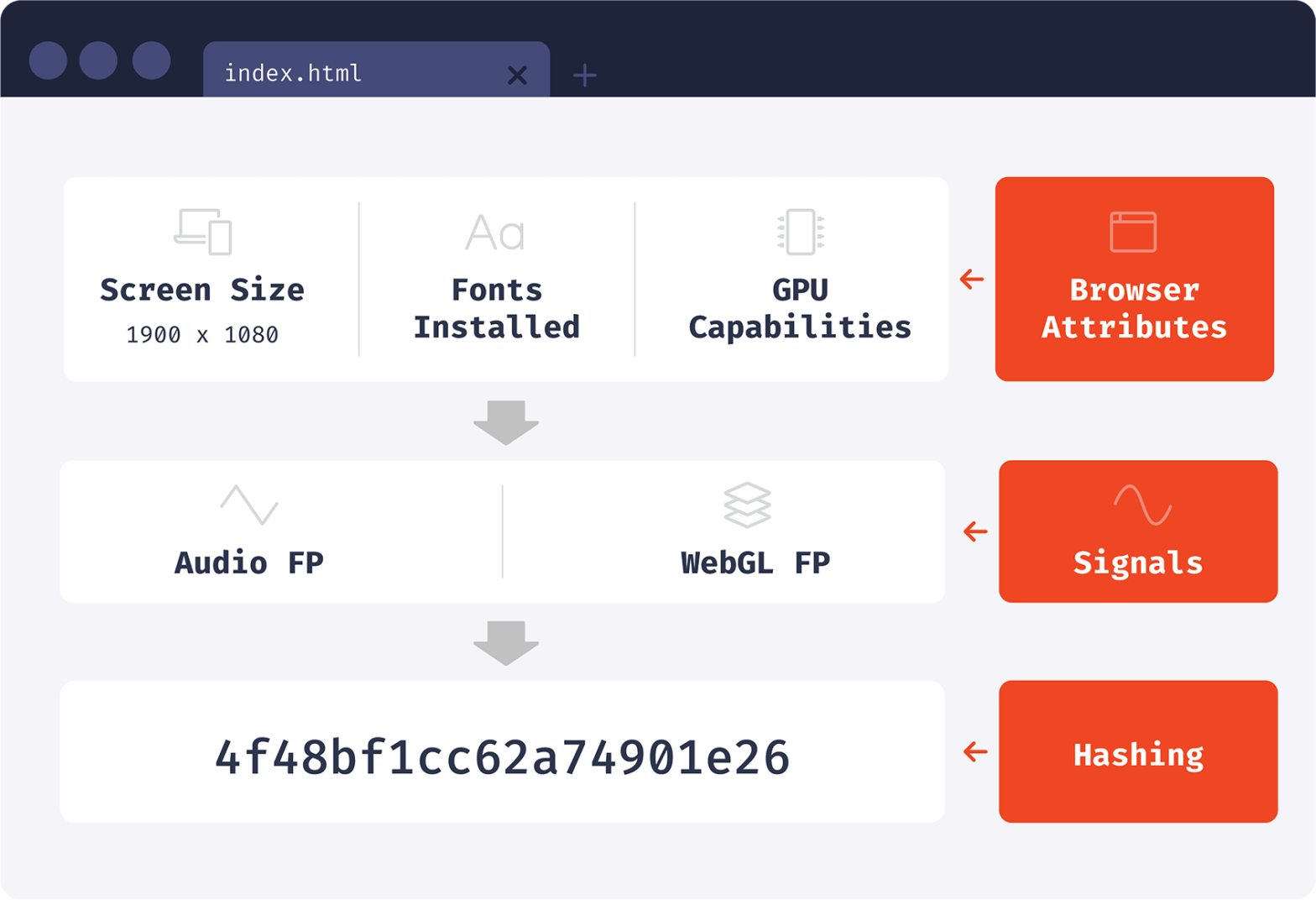

When generating a browser identifier, we can read browser attributes directly or process them first. One of the more creative techniques, and the one we’ll discuss today, is audio fingerprinting. Using an oscillator and a compressor from the Web Audio API, a script can calculate a specific number that identifies you. Every browser we have on our testing laptops generates a …
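To make the oscillator-plus-compressor idea concrete, here is a minimal sketch of how such a number could be computed. It is an illustration under assumptions, not necessarily the exact method the article goes on to describe: the function names `sumAbs` and `audioFingerprint`, the triangle wave at 10 kHz, the compressor settings, and the sample window 4500 to 5000 are all illustrative choices. The signal is rendered offline with `OfflineAudioContext`, so nothing is played through the speakers.

```typescript
// Reduce a range of rendered samples to a single number.
function sumAbs(
  samples: ArrayLike<number>,
  start = 0,
  end = samples.length
): number {
  let total = 0;
  for (let i = start; i < end; i++) {
    total += Math.abs(samples[i]);
  }
  return total;
}

// Render an oscillator through a compressor and summarize the output.
// Returns null outside a browser (no OfflineAudioContext available).
async function audioFingerprint(): Promise<number | null> {
  const Ctx = (globalThis as any).OfflineAudioContext;
  if (!Ctx) return null;

  // One channel, 44100 frames at 44.1 kHz: one second of audio.
  const ctx = new Ctx(1, 44100, 44100);

  // Signal source (assumed settings).
  const oscillator = ctx.createOscillator();
  oscillator.type = "triangle";
  oscillator.frequency.value = 10000;

  // The compressor is where small implementation differences can show up.
  const compressor = ctx.createDynamicsCompressor();
  compressor.threshold.value = -50;
  compressor.knee.value = 40;
  compressor.ratio.value = 12;
  compressor.attack.value = 0;
  compressor.release.value = 0.25;

  oscillator.connect(compressor);
  compressor.connect(ctx.destination);
  oscillator.start(0);

  const buffer = await ctx.startRendering();
  // Summarize an arbitrary slice of the rendered samples (assumed window).
  return sumAbs(buffer.getChannelData(0), 4500, 5000);
}
```

Calling `audioFingerprint()` in a browser console and comparing the result across browsers on the same machine is a quick way to see whether the values differ.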

Continue reading “How the Web Audio API is used for browser fingerprinting”