CSS Anchor Positioning is a powerful tool, but one thing you cannot do natively (yet) is animate the position-area property. This blog post introduces a technique to animate position-area changes using View Transitions.

A rather geeky/technical weblog, est. 2001, by Bramus

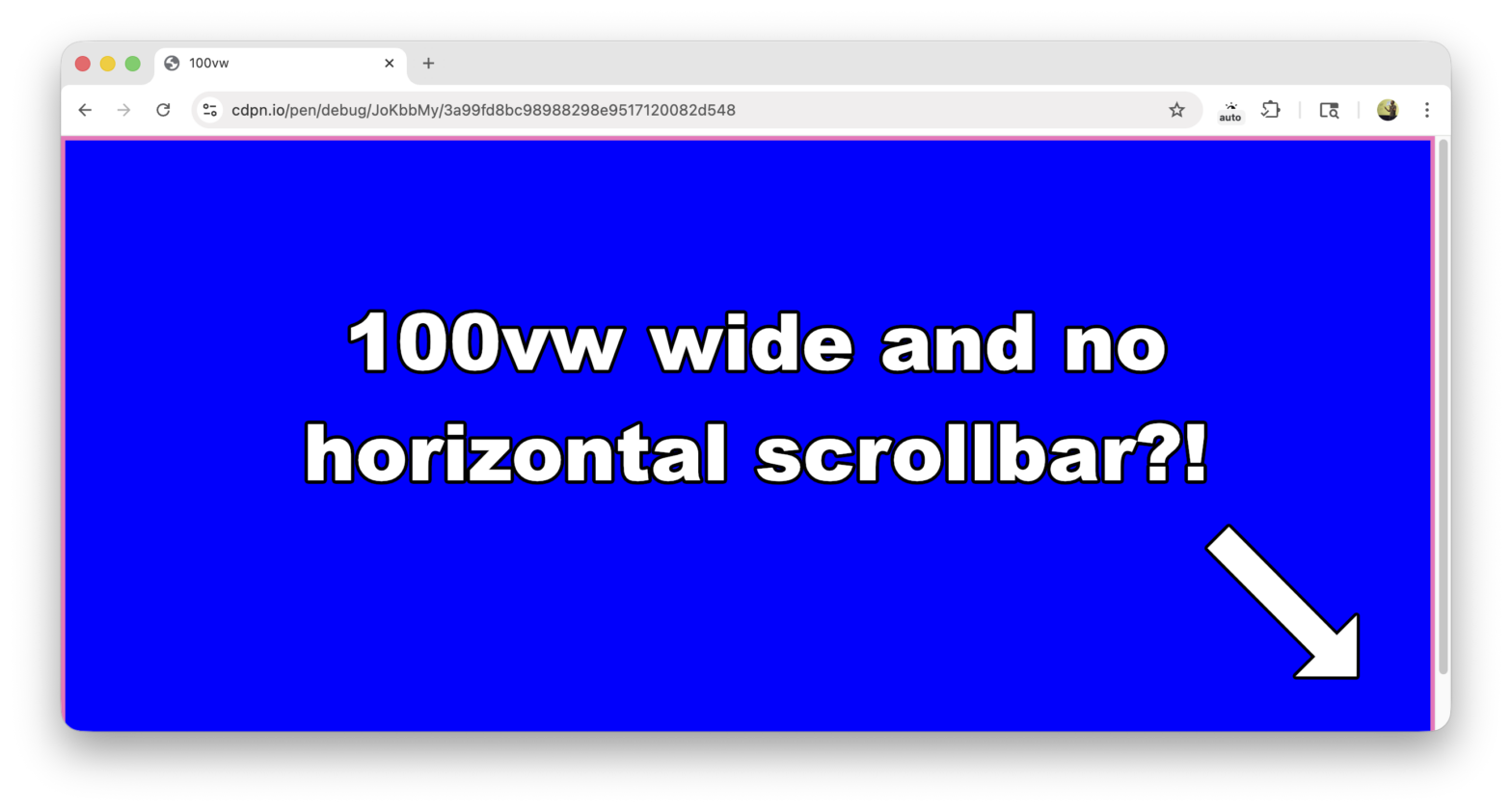

position-area with View Transitions

100vw is now scrollbar-aware (in Chrome 145+, under the right conditions)

From Chrome 145 onwards, 100vw automatically excludes the width of the vertical scrollbar if you have forced the html element to always show a vertical scrollbar (using overflow[-y]: scroll) or if you reserve space for it (using scrollbar-gutter: stable). The same applies to vh with a horizontal scrollbar, as well as to all small, large, and dynamic variants.
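As a rough illustration of those conditions, here is a minimal sketch. The `.full-width-banner` class name is hypothetical and not taken from the post; the behavior described in the comments is the one summarized above and only applies in browsers that ship it.

```css
/* Condition: reserve space for the vertical scrollbar on the root element.
   Per the text above, forcing it with overflow-y: scroll also qualifies. */
html {
  scrollbar-gutter: stable;
}

/* Hypothetical full-width element. With the condition above met,
   Chrome 145+ resolves 100vw without the scrollbar width, so this
   should no longer cause horizontal overflow there. Other browsers
   may still include the scrollbar width in 100vw. */
.full-width-banner {
  width: 100vw;
}
```

If neither condition is set on the html element, 100vw keeps its older meaning and can still extend underneath a classic scrollbar.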

rdar:// Bug ID

If you read the Safari release notes – like the Safari 26.2 release notes – you see a lot of trailing “(12345678)”-mentions in the list of fixed bugs. These numbers are Apple-internal bug IDs, as used within Apple’s internal bug tracker (fka?) named “Radar”.

These numbers are not linked to anything because Radar is Apple-internal, so to external people these numbers are practically useless … or are they?

overscroll-behavior: contain to prevent a page from scrolling while a <dialog> is open