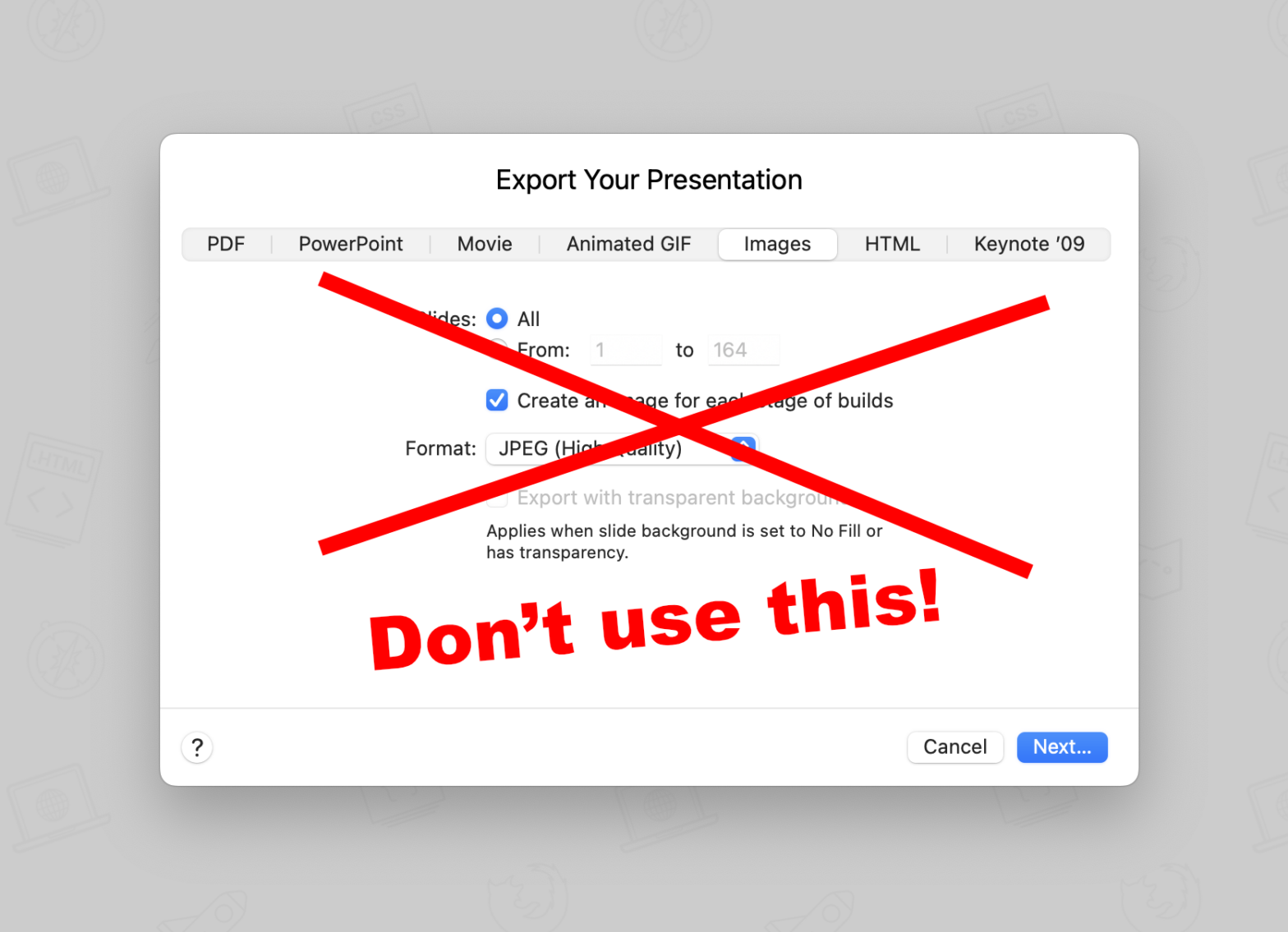

I like to use Keynote to create presentations, mainly for its animation features. I admit that it took me some time to get used to it, and not everything about it is perfect, but I think I’ve become efficient with it over time. The transitions and animations, such as seen in …

Continue reading “Convert a Keynote presentation to a set of hi-res images”