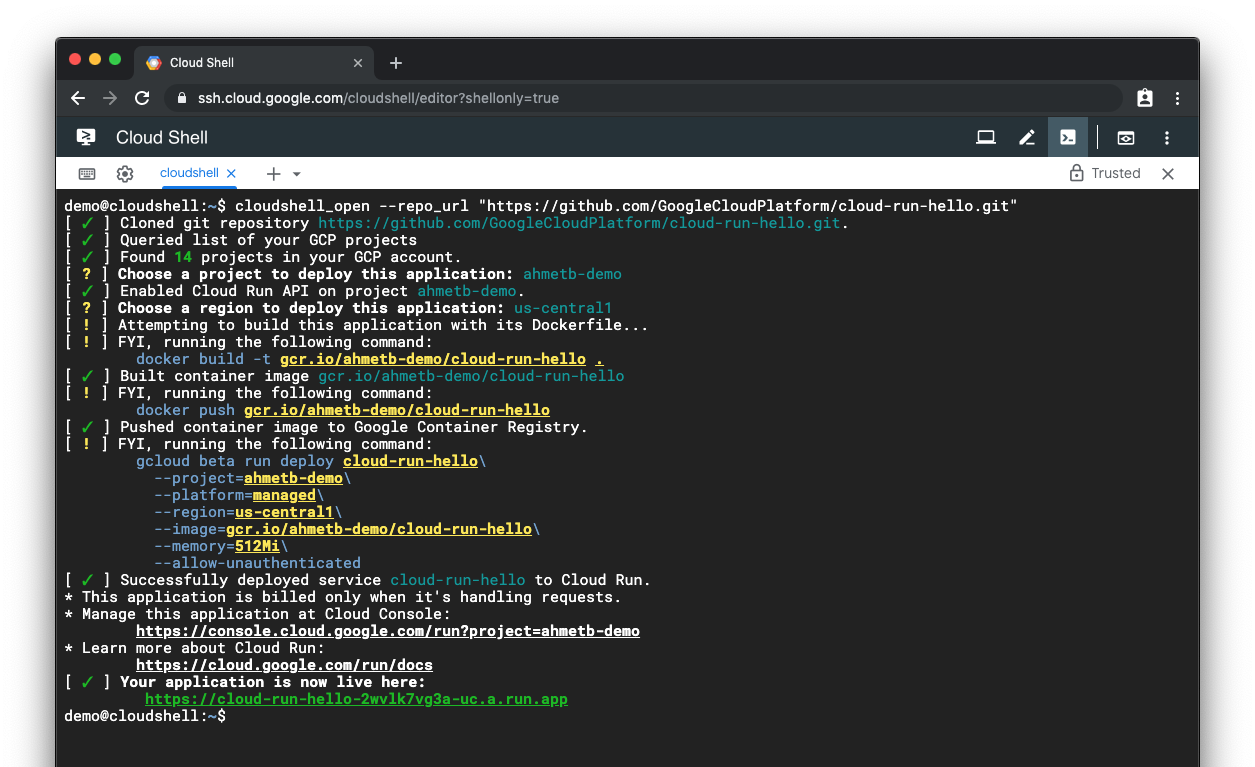

If you have a public repository with a Dockerfile, you can let users deploy the container to Google Cloud Run automatically by adding a Cloud Run Button. It’s no more than an image that links to https://deploy.cloud.run, like so: `[![Run on Google Cloud](https://deploy.cloud.run/button.svg)](https://deploy.cloud.run)`. Add that code to your README.md and when a visitor follows that …