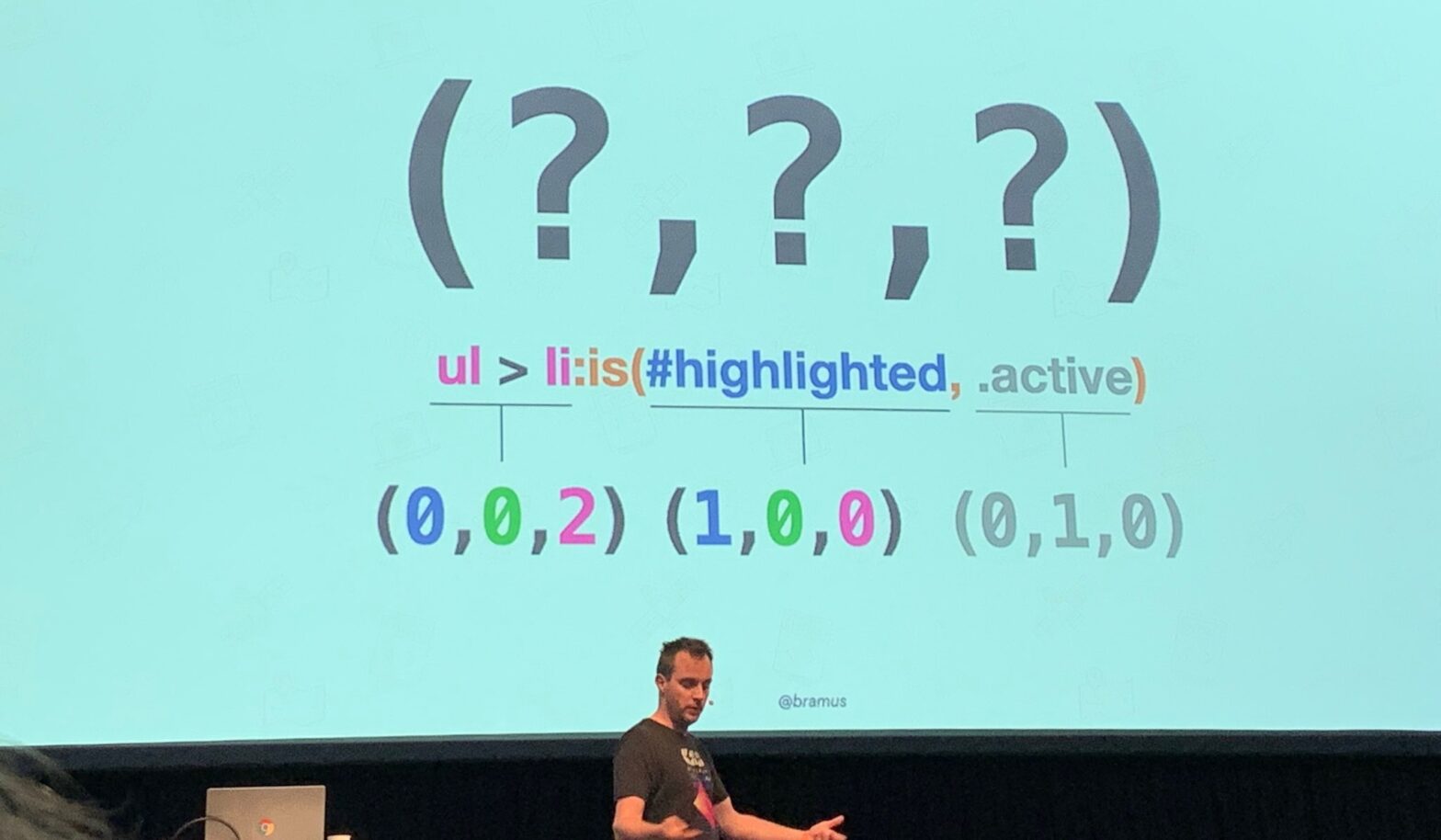

To remove some of the confusion, here’s a list of misconceptions about Specificity in CSS …

A rather geeky/technical weblog, est. 2001, by Bramus

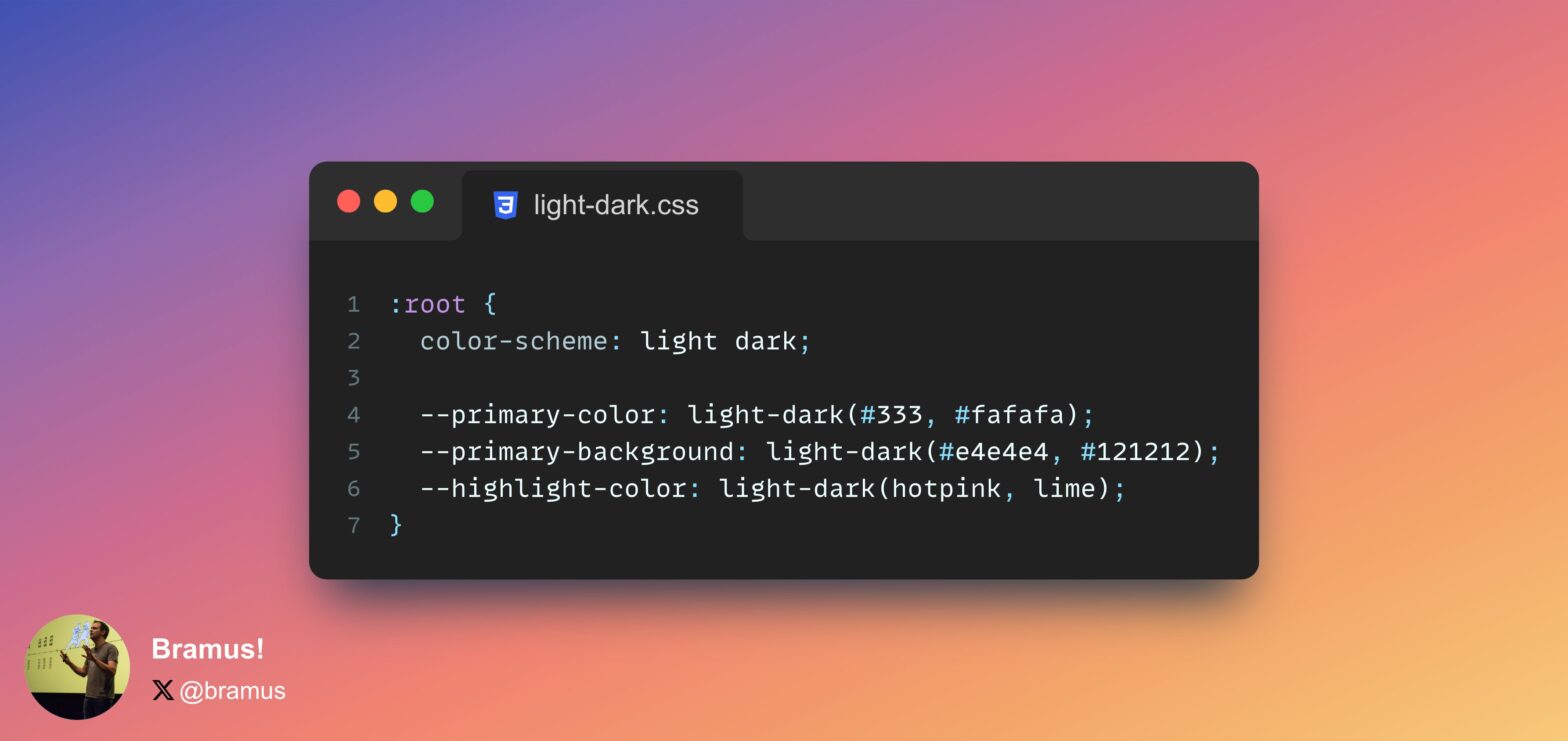

CSS color-scheme-dependent colors with light-dark()

I’ve written about light-dark() before here on bram.us, and last month I also wrote an article for web.dev about it. That article takes a somewhat different approach, so it’s still worth a read even if you’ve seen my previous one. System colors have the ability to react to the current used color-scheme …

Continue reading “CSS color-scheme-dependent colors with light-dark()”

CSS ::backdrop now inherits from its originating element

Screenshot of the demo featured in the article: in Chrome 122 the backdrop is light purple because it can access the custom property from the dialog element.

If you’ve ever struggled with ::backdrop not having access to custom properties, here’s some good news: As of Chrome 122 – and also in Firefox 120 and soon …

Continue reading “CSS ::backdrop now inherits from its originating element”

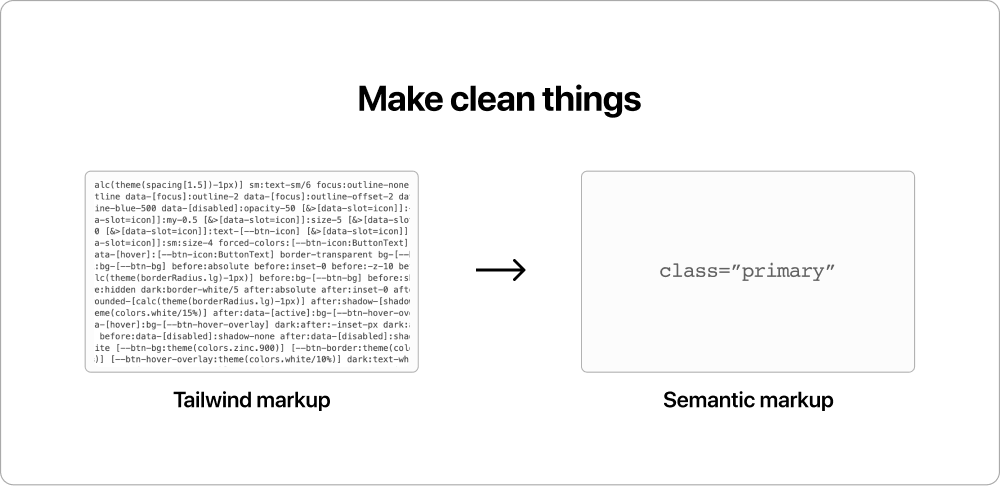

Nuanced piece by Tero Piirainen on where Tailwind took a good practice, twisted it around, and took it for a run. Use it or don’t, love it or hate it, but this last sentence of the article hits close to home: Learn to write clean HTML and CSS and stay relevant for years to come. …

Continue reading “Tailwind marketing and misinformation engine”