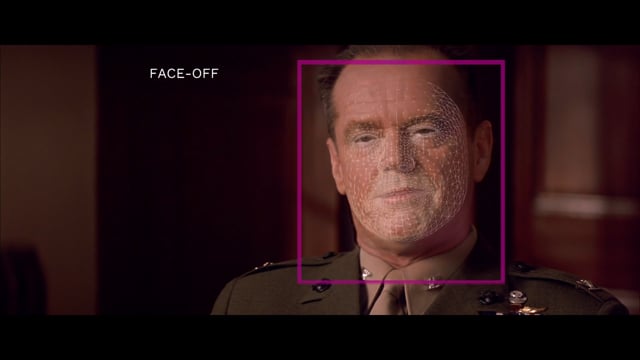

Now this is a good use of deepfake: correct the mouth and jaw movements of actors in dubbed video to match the new language track. The result is a (nearly) seamless lip-synced experience. TrueSync is the first AI offering from our neural network film lab. Filmmakers and content owners are now able to visually translate …

Continue reading “Using deepfake to create better Lip-Syncing”