Very cool project by Jo Franchetti in which she created a live captioning service.

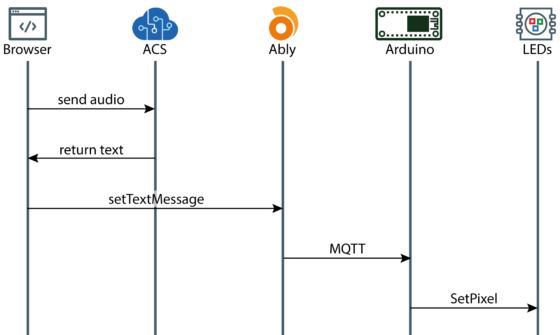

She uses a web page on a phone to capture her speech with `getUserMedia()`, then sends the audio to the Azure Cognitive Services “Speech to Text” service to get the text back. The text eventually ends up on a flexible LED display.
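
To give an idea of the capture step, here is a minimal sketch of requesting the microphone with `getUserMedia()`. It is not Jo's actual code: the `captureMicrophone` helper and the `MicrophoneProvider` interface are names made up for this example, and the audio-only constraint is an assumption.

```typescript
// Hypothetical helper; only getUserMedia() itself is mentioned in the project.

// The small slice of navigator.mediaDevices this sketch needs.
// Declared locally so the snippet also type-checks outside a browser.
interface MicrophoneProvider {
  getUserMedia(constraints: { audio: boolean }): Promise<unknown>;
}

// Ask for an audio-only stream. In a browser this triggers a permission
// prompt; the promise rejects if the user declines or no mic is available.
async function captureMicrophone(devices: MicrophoneProvider): Promise<unknown> {
  return devices.getUserMedia({ audio: true });
}

// In a page served over HTTPS this would be called as:
//   const stream = await captureMicrophone(navigator.mediaDevices);
```

Passing the provider in as an argument keeps the sketch easy to try without a real microphone. In the browser, the resolved value is a `MediaStream` that can be fed to whatever sends the audio on to the speech service.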

Making a wearable live caption display using Azure Cognitive Services and Ably Realtime →

💡 This looks like a perfect use case for the Web Speech API. Unfortunately that API is Chrome-only right now …
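
For the curious, a rough sketch of what the Web Speech API route could look like. The property names (`continuous`, `interimResults`, `lang`, `onresult`, `resultIndex`, `isFinal`, `transcript`) come from the API itself. The `startCaptioning` and `finalTranscript` helpers, the local interfaces, and the `en-US` language are assumptions made for this example.

```typescript
// Local stand-ins for the browser types, so the sketch type-checks anywhere.
interface RecognitionAlternative { transcript: string }
interface RecognitionResult { isFinal: boolean; 0: RecognitionAlternative }
interface RecognitionEvent { resultIndex: number; results: ArrayLike<RecognitionResult> }
interface RecognizerLike {
  continuous: boolean;
  interimResults: boolean;
  lang: string;
  onresult: ((event: RecognitionEvent) => void) | null;
  start(): void;
}

// Collect the text of every finalized result that changed in this event.
function finalTranscript(event: RecognitionEvent): string {
  let text = "";
  for (let i = event.resultIndex; i < event.results.length; i++) {
    const result = event.results[i];
    if (result.isFinal) text += result[0].transcript;
  }
  return text;
}

// Hypothetical helper: configure a recognizer and forward captions to a callback.
function startCaptioning(
  Recognizer: new () => RecognizerLike,
  onCaption: (text: string) => void,
): RecognizerLike {
  const recognizer = new Recognizer();
  recognizer.continuous = true;      // keep listening after each phrase
  recognizer.interimResults = false; // only deliver finalized phrases
  recognizer.lang = "en-US";         // assumed language
  recognizer.onresult = (event) => {
    const text = finalTranscript(event);
    if (text) onCaption(text);
  };
  recognizer.start();
  return recognizer;
}

// In Chrome the constructor is exposed with a prefix:
//   startCaptioning(window.webkitSpeechRecognition, (text) => console.log(text));
```

With this approach the browser handles both the microphone access and the transcription, so there is no separate upload step to a speech service.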