The Web Perception Toolkit is an open-source library that provides the tools you need to add visual search to your website. The toolkit takes a stream from the device camera and passes it through a set of detectors. Any markers or targets the detectors identify are mapped to structured data on your site, and the user is shown a customizable UI that offers extended information about them.

This mapping is defined using structured data (JSON-LD). Here's an example for a barcode:

[

{

"@context": "https://schema.googleapis.com/",

"@type": "ARArtifact",

"arTarget": {

"@type": "Barcode",

"text": "012345678912"

},

"arContent": {

"@type": "WebPage",

"url": "http://localhost:8080/demo/artifact-map/products/product1.html",

"name": "Product 1",

"description": "This is a product with a barcode",

"image": "http://localhost:8080/demo/artifact-map/products/product1.png"

}

}

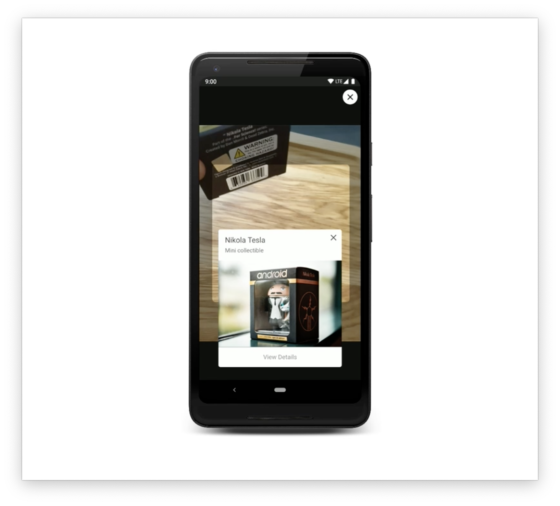

]When the user now scans an object with that barcode (as defined in arTarget), the description page (defined in arContent) will be shown on screen.
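To make the mapping step concrete, here is a minimal TypeScript sketch of the lookup: it parses a JSON-LD artifact list like the one above and returns the `arContent` for a detected barcode value. This is not the toolkit's own API. The names `buildArtifactMap`, `lookupContent`, and `DetectedMarker` are hypothetical, and the sketch assumes a target is matched on its `@type` plus its `text` field, which only covers text-bearing targets such as barcodes.

```typescript
// Hypothetical types modelling only the JSON-LD fields used in the example above.
interface ArtifactContent {
  "@type": string;
  url: string;
  name?: string;
  description?: string;
  image?: string;
}

interface ArtifactTarget {
  "@type": string;
  text?: string;
}

interface ARArtifact {
  "@context"?: string;
  "@type": string;
  arTarget: ArtifactTarget;
  arContent: ArtifactContent;
}

// Hypothetical shape of a marker reported by a detector (not the toolkit's type).
interface DetectedMarker {
  type: string; // e.g. "Barcode"
  value: string; // the decoded text of the marker
}

// Builds a lookup table keyed by "<target type>:<target text>".
// Accepts either a JSON array of artifacts or a single artifact object.
function buildArtifactMap(jsonLd: string): Map<string, ArtifactContent> {
  const parsed: unknown = JSON.parse(jsonLd);
  const list = (Array.isArray(parsed) ? parsed : [parsed]) as ARArtifact[];
  const map = new Map<string, ArtifactContent>();
  for (const item of list) {
    // Skip anything that is not an ARArtifact with a text-based target.
    if (item["@type"] !== "ARArtifact" || item.arTarget?.text === undefined) continue;
    map.set(`${item.arTarget["@type"]}:${item.arTarget.text}`, item.arContent);
  }
  return map;
}

// Returns the content to show for a detected marker, or undefined if it is unmapped.
function lookupContent(
  map: Map<string, ArtifactContent>,
  marker: DetectedMarker,
): ArtifactContent | undefined {
  return map.get(`${marker.type}:${marker.value}`);
}

// Usage: map the barcode from the example above to its product page.
const artifacts = buildArtifactMap(`[{
  "@context": "https://schema.googleapis.com/",
  "@type": "ARArtifact",
  "arTarget": { "@type": "Barcode", "text": "012345678912" },
  "arContent": {
    "@type": "WebPage",
    "url": "http://localhost:8080/demo/artifact-map/products/product1.html",
    "name": "Product 1"
  }
}]`);

const content = lookupContent(artifacts, { type: "Barcode", value: "012345678912" });
console.log(content?.name); // prints Product 1
```

Keying the table on both the target type and its text keeps, for example, a QR code and a barcode that happen to encode the same string from resolving to the same content.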

In addition to barcodes, supported detectors include QR codes, geolocation, and 2D images. ML image classification is not yet supported but is planned.

The Web Perception Toolkit: Getting Started →

Visual searching with the Web Perception Toolkit →

Web Perception Toolkit Repo (GitHub) →