When you build Docker images locally, Docker leverages its build cache:

When building an image, Docker steps through the instructions in your Dockerfile, executing each in the order specified. As each instruction is examined, Docker looks for an existing image in its cache that it can reuse, rather than creating a new (duplicate) image.

It is therefore important to carefully consider the order of the instructions in your Dockerfile.

~

When using Google Cloud Build however, there – by default – is no cache to fall back to. As you’re paying for every second spent building it’d be handy to have some caching in place. Currently there are two options to do so:

- Using the --cache-from argument in your build config
- Using the Kaniko cache

⚠️ Note that the same rules as with the local cache layers apply for both scenarios: if you constantly change a layer in the earlier stages of your Docker build, it won’t be of much benefit.

~

Using the --cache-from argument (ref)

The easiest way to increase the speed of your Docker image build is by specifying a cached image that can be used for subsequent builds. You can specify the cached image by adding the --cache-from argument in your build config file, which will instruct Docker to build using that image as a cache source.

To make this work you’ll first need to pull the previously built image from the registry, and then refer to it using the --cache-from argument:

    steps:
    - name: 'gcr.io/cloud-builders/docker'
      entrypoint: 'bash'
      args:
      - '-c'
      - |
        docker pull gcr.io/$PROJECT_ID/[IMAGE_NAME]:latest || exit 0
    - name: 'gcr.io/cloud-builders/docker'
      args: [
        'build',
        '-t', 'gcr.io/$PROJECT_ID/[IMAGE_NAME]:latest',
        '--cache-from', 'gcr.io/$PROJECT_ID/[IMAGE_NAME]:latest',
        '.'
      ]
    images: ['gcr.io/$PROJECT_ID/[IMAGE_NAME]:latest']

~

Using the Kaniko cache (ref)

Kaniko cache is a Cloud Build feature that caches container build artifacts by storing and indexing intermediate layers within a container image registry, such as Google’s own Container Registry, where it is available for use by subsequent builds.

To enable it, replace the cloud-builders/docker worker in your cloudbuild.yaml with the kaniko-project/executor.

    steps:
    - name: 'gcr.io/kaniko-project/executor:latest'
      args:
      - --destination=gcr.io/$PROJECT_ID/image
      - --cache=true
      - --cache-ttl=XXh

When using Kaniko, images are automatically pushed to Container Registry as soon as they are built. You don’t need to specify your images in the images attribute, as you would when using cloud-builders/docker.
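To make the XXh placeholder in the config above a bit more concrete, here is a minimal sketch of a filled-in cloudbuild.yaml. The image name my-app and the 6 hour TTL are assumptions picked for illustration only; choose values that match your own project and how often your base layers change.

```yaml
# cloudbuild.yaml (sketch): Kaniko build with layer caching enabled
steps:
- name: 'gcr.io/kaniko-project/executor:latest'
  args:
  # Hypothetical image name; replace "my-app" with your own.
  - --destination=gcr.io/$PROJECT_ID/my-app
  # Store intermediate layers in the registry and reuse them on later builds.
  - --cache=true
  # Assumed value: cached layers older than 6 hours are treated as expired.
  - --cache-ttl=6h
# No "images:" attribute is needed here, since Kaniko pushes the image itself.
```

A shorter TTL means layers get rebuilt more often (fresher base images, slower builds); a longer one favours build speed.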

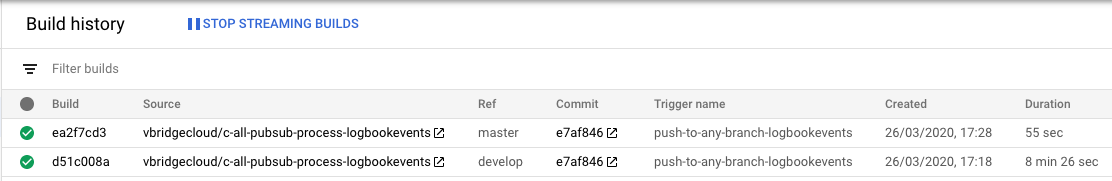

Here’s a comparison of a first and second run:

From over 8 minutes down to 55 seconds with one simple change to our cloudbuild.yaml 🙂
